2024-11-12 Handling Failover with Repmgr and PgBouncer

Now that I have a "prototype" of PostgreSQL cluster with automatic failover using repmgr. I need to know how to access the database from an app, in particular, a Python webapp with SQLAlchemy. I'd like to explore the use of PgBouncer with repmgr for this.

My goal, at least for now, is not to have a fully HA pipeline, but a webapp accessing an HA-enhanced database without introducing a lot of complexity. After some investigation, I choose add PgBouncer into the mixture, by letting the webapp connect to PgBouncer, and PgBouncer sync with the PostgreSQL cluster maintained by repmgr. There are many resources on the web, old or new, about this topic, for example this and this.

PgBouncer

Let's get started. Check the official PgBouncer website to see the latest info, which has no information regrading Docker installation. But it is easily enough to search the web for examples. So,

mkdir pgbouncer

cd pgbouncerCreate the docker-compose.yaml file:

networks:

mynet:

name: mynet

external: true

services:

pgbouncer:

restart: unless-stopped

container_name: pgbouncer

image: edoburu/pgbouncer:latest

environment:

- DB_USER=postgres

- DB_PASSWORD=...

- DB_HOST=192.168.111.1

- DB_PORT=3106

- AUTH_TYPE=scram-sha-256

- POOL_MODE=transaction

- ADMIN_USERS=postgres

ports:

- "192.168.111.1:5432:5432"

networks:

- mynet

healthcheck:

test: ['CMD', 'pg_isready', '-h', 'localhost']We assume the PostgreSQL cluster has been up and running, with its own docker-compose.yaml (so that it can be started/stoped independently from PgBouncer here). Furthermore, these databases are using the port other than 5432, which is used by PgBouncer here. It is also expected that all applications access the database in the Nebula network 192.168.111.0/24.

After starting the container:

docker-compose up -dwe can connect to PgBouncer in the same way we connect to the PostgreSQL databases before:

$ psql -h 192.168.111.1 -U postgres

Password for user postgres:

psql (16.4 (Debian 16.4-1.pgdg120+2))

Type "help" for help.

postgres=# \qAfter connecting successfully, you should spend time dealing with theDB_PASSWORDnow. In fact, PgBouncer creates configuration files in/etc/pgbouncer, includingpgbouncer.inianduserlist.txt, the latter containing the password given by theDB_PASSWORDenvironment variable. For future "run-time" configuration, we should bind a local folder with/etc/pgbouncer, but probably have to run the container as root once to set permissions right first. ThenDB_PASSWORDcan be removed, but the folder needs to be properly protected.

Since we have a brand new database right now, let's take this opportunity to create a proper user noter and the database noterdb for it, and change the password for noter via psql:

$ docker-compose exec -it postgres-ha bash

root:/# su - postgres

postgres:~$ createuser noter

postgres:~$ createdb noterdb -O owner

postgres:~$ ^D

root:/# ^D

$ psql -h 192.168.111.1 -U postgres

Password for user postgres:

...

postgres=# \password noter

postgres-# ...Make sure we can connect using the newly created user and database, providing the password we specified:

psql -h 192.168.111.1 -U noter -d noterdbThe user and database will be used for the note taking app built earlier.

Manual Failover

Before diving into the task of making automatic failover work, let's first see how to do failover manually when the primary database goes off-line and a new primary is elected. A quick reference is here.

So on the primary node node1, cd into the folder for PostgreSQL container and turn off the database:

docker-compose downNow we cannot connect to the database via PgBouncer now:

psql -h 192.168.111.1 -U noter -d noterdbOn the server node2, look at the cluster status:

$ docker-compose exec -it postgres-ha bash

root:/# su postgres

postgres:~$ repmgr -f /etc/repmgr.conf cluster show

Wait for a while and see if a new primary has been elected. Suppose node2 with IP 192.168.111.2 is the new primary, we can manually switch the backend database to it for PgBouncer. To do this, on the node where PgBouncer container is running (i.e. node1) , shell into the container:

$ docker-compose exec -it pgbouncer sh

/ $First check the configuration file /etc/pgbouncer/pgbouncer.ini:

################## Auto generated ##################

[databases]

* = host=192.168.111.1 port=3106 auth_user=postgres

[bgbouncer]

...The content is auto generated based on the environment variables specified in docker-compose.yaml. We can change the host to 192.168.111.2 in the file and save it. To tell PgBouncer to reload the configuration dynamically, we can use psql to connect to PgBouncer's database and issue the RELOAD command:

/ $ psql -h 127.0.0.1 -U postgres

Password for user postgres:

psql (16.3, server 16.4 (Debian 16.4-1.pgdg120+2))

Type "help" for help.

postgres=# RELOADNow we can connect with the password to the database cluster via PgBouncer again:

psql -h 192.168.111.1 -U noter -d noterdbConnecting from SQLAlchemy

Let's change the note taking app built previously to access PgBouncer instead of the PostgreSQL server directly. Looking at the file app.py, the connection string for PostgreSQL was like:

postgresql://postgresql:mypass@192.168.x.x/noterdb

Now we can change the connection string:

app.config["SQLALCHEMY_DATABASE_URI"] = "postgresql://noter:xxx@192.168.111.1/noterdb"Suppose the primary database is on the machine 192.168.2.101 where our Python webapp also runs, in a local network different from Nebula's, we can launch the application:

$ flask run --host=0.0.0.0 --debug

* Debug mode: on

...

* Running on all addresses (0.0.0.0)

* Running on http://127.0.0.1:5000

* Running on http://192.168.2.101:5000

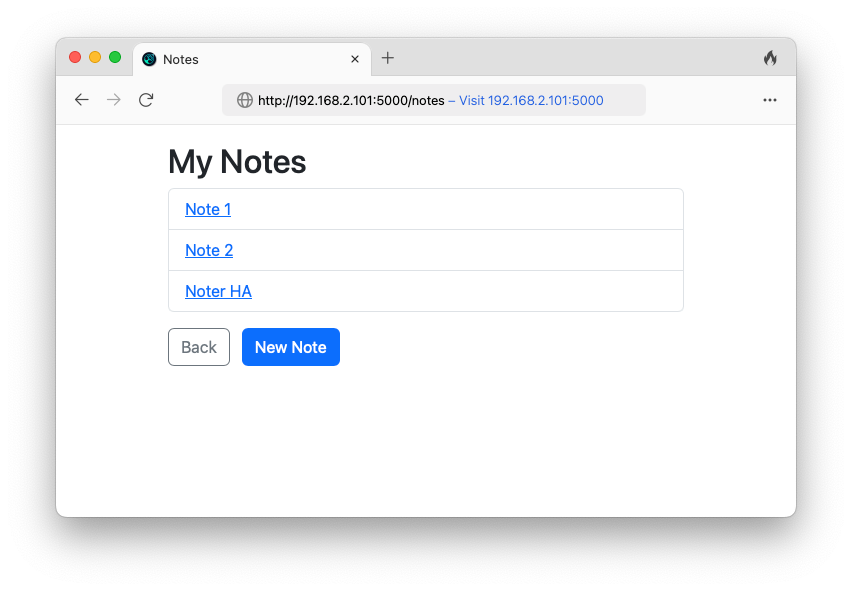

Press CTRL+C to quitAccess the webapp at http://192.168.2.101 and create some notes, and we have

Perform Failover Remotely

To achieve automatic failover with repmgr and PgBouncer, the general approach suggested from the web is for the new primary, as postgres user, to:

- compose a new configuration file

pgbouncer.inisomewhere locally - copy the file to remote server where PgBouncer is running, and replace its

pgbouncer.inifile, via rsync and passwordless SSH - also via SSH, use

psqlon the PgBouncer machine to issue theRELOADcommand (Supposedly ifpg_hba.confon the PgBouncer side also trustspostgresson the192.168.111.0/24network this command can also be run directly from the primary node).

So there are at least three tasks remaining:

- To enable passwordless SSH connectivity between all servers involved, from within each PostgreSQL container to remote machines,

- To generate proper

pgbouncer.inifrom the new primary database container based on the cluster status - To setup file and folder permissions correctly so that

repmgrfrom each PostgreSQL container can "manipulate" remote machines usingrsyncand SSH.

I haven't worked out all the details. These involve creating suitable a user and group, and binding more local folders to the containers involved, so that the above scheme works.

To Be Continued